Breast cancer is one of the most common cancers affecting women worldwide. Early detection through various diagnostic methods significantly improves treatment success. Machine learning can play a vital role in aiding diagnosis by predicting the likelihood of malignancy based on patient data. In this blog, we will explore the Breast Cancer dataset using Python's sklearn library, a popular tool for machine learning.

Breast Cancer Dataset in Python

The Breast Cancer dataset, also known as the Breast Cancer Wisconsin dataset, is a classic dataset used for binary classification tasks. It consists of 569 instances, each with 30 numeric features that describe the characteristics of cell nuclei present in the images. These features are:

Radius

Texture

Perimeter

Area

Smoothness

Compactness

Concavity

Concave points

Symmetry

Fractal dimension

Each of these features has a mean, standard error, and worst (largest) value recorded. The target variable is binary, indicating whether the cancer is benign (0) or malignant (1).

Loading Breast Cancer Dataset from sklearn

Let's start by loading the dataset using sklearn's built-in function.

from sklearn.datasets import load_breast_cancer

import pandas as pd

# Load the dataset

data = load_breast_cancer()

# Create a DataFrame for easy manipulation

df = pd.DataFrame(data.data, columns=data.feature_names)

df['target'] = data.target

# Display the first few rows of the dataset

print(df.head())

Output of the above code:

mean radius mean texture mean perimeter mean area mean smoothness \

0 17.99 10.38 122.80 1001.0 0.11840

1 20.57 17.77 132.90 1326.0 0.08474

2 19.69 21.25 130.00 1203.0 0.10960

3 11.42 20.38 77.58 386.1 0.14250

4 20.29 14.34 135.10 1297.0 0.10030

mean compactness mean concavity mean concave points mean symmetry \

0 0.27760 0.3001 0.14710 0.2419

1 0.07864 0.0869 0.07017 0.1812

2 0.15990 0.1974 0.12790 0.2069

3 0.28390 0.2414 0.10520 0.2597

4 0.13280 0.1980 0.10430 0.1809

mean fractal dimension ... worst texture worst perimeter worst area \

0 0.07871 ... 17.33 184.60 2019.0

1 0.05667 ... 23.41 158.80 1956.0

2 0.05999 ... 25.53 152.50 1709.0

3 0.09744 ... 26.50 98.87 567.7

4 0.05883 ... 16.67 152.20 1575.0

worst smoothness worst compactness worst concavity worst concave points \

0 0.1622 0.6656 0.7119 0.2654

1 0.1238 0.1866 0.2416 0.1860

2 0.1444 0.4245 0.4504 0.2430

3 0.2098 0.8663 0.6869 0.2575

4 0.1374 0.2050 0.4000 0.1625

worst symmetry worst fractal dimension target

0 0.4601 0.11890 0

1 0.2750 0.08902 0

2 0.3613 0.08758 0

3 0.6638 0.17300 0

4 0.2364 0.07678 0 Data Exploration and Visualization

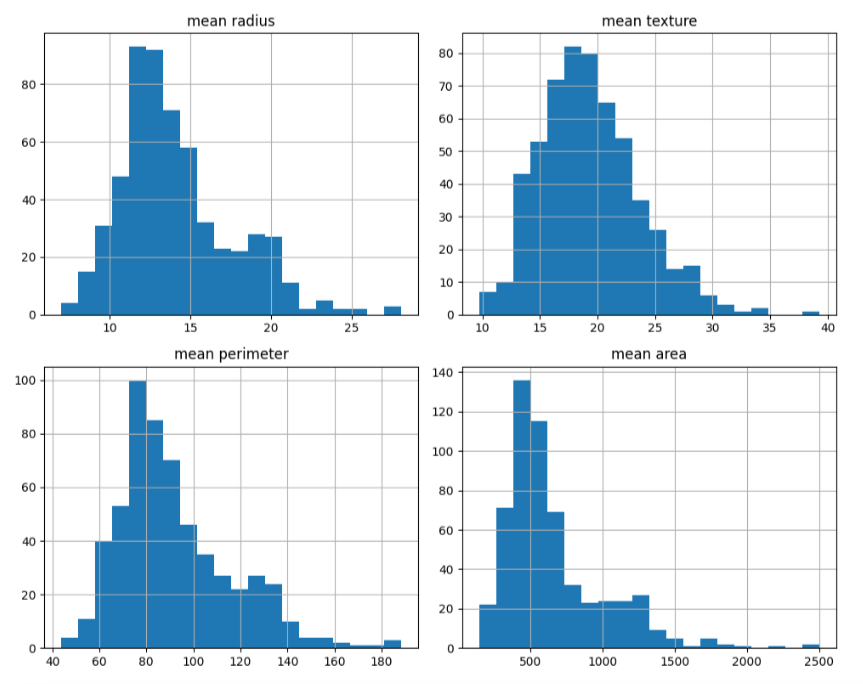

Data exploration and visualization are crucial steps in understanding the distribution and relationships within a dataset. Using libraries like Seaborn and Matplotlib, we can create plots such as histograms, count plots, and pair plots to visually inspect feature distributions and correlations. These visualizations help identify patterns, outliers, and potential issues in the data, guiding further analysis and model development. Let's explore the dataset by checking the distribution of the target variable and some features.

import seaborn as sns

import matplotlib.pyplot as plt

# Distribution of target variable

sns.histplot(df['target'], kde=True)

plt.title('Distribution of Target Variable')

plt.show()

Output of the above code:

# Distribution of a few features

features = ['mean radius', 'mean texture', 'mean perimeter', 'mean area']

df[features].hist(figsize=(10, 8), bins=20)

plt.tight_layout()

plt.show()

Output of the above code:

Data Preprocessing in Python - sklearn

Data preprocessing in sklearn involves preparing the dataset for modeling by addressing issues like missing values, scaling features, and encoding categorical variables. It often includes splitting the data into training and testing sets to evaluate model performance. Essential tools like StandardScaler and LabelEncoder are commonly used to standardize data and convert labels to numerical format, ensuring consistent and accurate model training. We are going to keep it simple, before feeding the data into a machine learning model, we will preprocess it. This includes splitting the dataset into training and testing sets and scaling the features.

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

# Split the data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(df[data.feature_names], df['target'], test_size=0.2, random_state=42)

# Scale the features

scaler = StandardScaler()

X_train = scaler.fit_transform(X_train)

X_test = scaler.transform(X_test)

Building a Classification Model - Random Forest

Random Forest in scikit-learn is an ensemble learning method that combines multiple decision trees to improve prediction accuracy and control overfitting. It works by constructing a multitude of decision trees during training and outputting the mode of the classes (classification) or mean prediction (regression) of the individual trees. This method is robust against overfitting, particularly with large datasets, and is versatile for both classification and regression tasks. Now, let's build a simple classification model using a Random Forest classifier. We'll train the model on the training set and evaluate its performance on the testing set.

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import classification_report, accuracy_score

# Initialize the classifier

clf = RandomForestClassifier(random_state=42)

# Train the classifier

clf.fit(X_train, y_train)

# Predict the labels for the test set

y_pred = clf.predict(X_test)

# Evaluate the model

accuracy = accuracy_score(y_test, y_pred)

print(f'Accuracy: {accuracy * 100:.2f}%')

# Classification report

print(classification_report(y_test, y_pred))

Output of the above code:

Accuracy: 96.49%

precision recall f1-score support

0 0.98 0.93 0.95 43

1 0.96 0.99 0.97 71

accuracy 0.96 114

macro avg 0.97 0.96 0.96 114

weighted avg 0.97 0.96 0.96 114Feature Importance

Feature importance is a technique used to determine which features in a dataset contribute the most to the predictions made by a model. In the context of a Random Forest classifier, feature importance can be assessed by measuring the decrease in impurity or by evaluating the accuracy drop when a feature is removed. Understanding feature importance helps identify key factors influencing model decisions, providing insights into the underlying data and guiding feature selection for improved model performance. Understanding which features contribute the most to the prediction is valuable. We can inspect the feature importances determined by the Random Forest model.

import numpy as np

# Get feature importances

importances = clf.feature_importances_

indices = np.argsort(importances)[::-1]

# Print feature ranking

print("Feature ranking:")

for f in range(X_train.shape[1]):

print(f"{f + 1}. Feature {data.feature_names[indices[f]]} ({importances[indices[f]]})")

Output of the above code:

Feature ranking:

1. Feature worst area (0.15389236463205394)

2. Feature worst concave points (0.14466326620735528)

3. Feature mean concave points (0.10620998844591638)

4. Feature worst radius (0.07798687515738047)

5. Feature mean concavity (0.06800084191430111)

6. Feature worst perimeter (0.06711483267839194)

7. Feature mean perimeter (0.053269746128179675)

8. Feature mean radius (0.048703371737755234)

9. Feature mean area (0.04755500886018552)

10. Feature worst concavity (0.031801595740040434)

11. Feature area error (0.022406960160458473)

12. Feature worst texture (0.021749011006763207)

13. Feature worst compactness (0.020266035899623565)

14. Feature radius error (0.02013891719419153)

15. Feature mean compactness (0.013944325074050485)

16. Feature mean texture (0.013590877656998469)

17. Feature perimeter error (0.01130301388178435)

18. Feature worst smoothness (0.010644205147280952)

19. Feature worst symmetry (0.010120176131974357)

20. Feature concavity error (0.009385832251596627)

21. Feature mean smoothness (0.007285327830663239)

22. Feature fractal dimension error (0.00532145634222884)

23. Feature compactness error (0.005253215538990106)

24. Feature worst fractal dimension (0.005210118545497296)

25. Feature texture error (0.004723988073894702)

26. Feature smoothness error (0.004270910110504497)

27. Feature symmetry error (0.004018418617722808)

28. Feature mean fractal dimension (0.0038857721093275)

29. Feature mean symmetry (0.003770291819290666)

30. Feature concave points error (0.003513255105598506)Conclusion

In this blog, we explored the Breast Cancer dataset using Python and scikit-learn. We loaded the data, performed basic data exploration and visualization, preprocessed the data, and built a Random Forest classifier to predict whether a tumor is benign or malignant. The model achieved a high accuracy, demonstrating the potential of machine learning in aiding medical diagnosis. Additionally, we examined feature importances to gain insights into which features were most influential in the model's predictions.

By leveraging such datasets and machine learning techniques, we can enhance diagnostic processes and potentially save lives through early and accurate detection.

Comments