Generative Adversarial Networks, commonly known as GANs, have reshaped generative modeling since Ian Goodfellow and his colleagues introduced them in 2014. They make it possible to create realistic images, music, and even text from scratch. In this blog, we'll cover the fundamentals of GANs, walk through how they work, and explore some of their most exciting applications.

What Are Generative Adversarial Networks (GANs)?

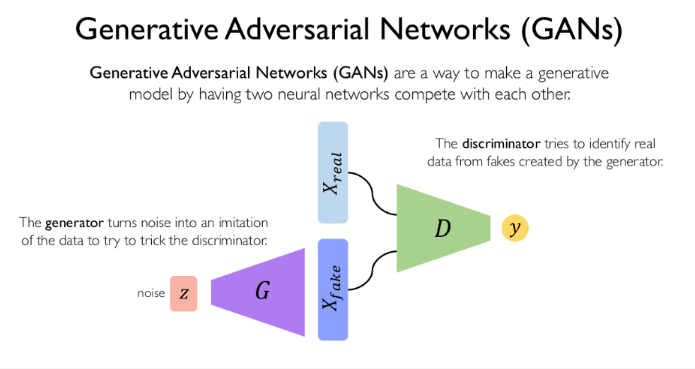

GANs are a class of machine learning models designed to generate new data samples that resemble a given dataset. A GAN consists of two neural networks, a generator and a discriminator, that are pitted against each other in an adversarial game. The generator produces data that mimics the dataset, such as images, while the discriminator tries to tell the real data from the generator's fakes. This interplay drives both networks to improve: the generator gets better at producing realistic data, and the discriminator gets better at detecting fakes. Over time, the generator learns to produce data that the discriminator can no longer reliably distinguish from real data.

GANs have become particularly popular for tasks such as image synthesis, data augmentation, and creating deepfakes. They can be challenging to train, requiring careful tuning and significant computational resources, but they have had a major impact on computer vision, digital art, and related fields.


Generator: The generator's role is to produce fake data that resembles the real data. It starts by taking a random noise vector as input and transforms it into a data sample.

Discriminator: The discriminator, on the other hand, attempts to distinguish between real data (from the actual dataset) and fake data (produced by the generator). It outputs a probability value indicating whether a given sample is real or fake.
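To make the two roles concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions rather than a real GAN: the names `toy_generator` and `toy_discriminator`, the dimensions, and the single-layer "networks" with untrained random weights are all hypothetical and chosen only to show the inputs and outputs of each role.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

TOY_NOISE_DIM = 8    # hypothetical length of the noise vector
TOY_SAMPLE_DIM = 4   # hypothetical length of one data sample

# Randomly initialised weights stand in for trained networks.
gen_weights = rng.normal(scale=0.1, size=(TOY_NOISE_DIM, TOY_SAMPLE_DIM))
disc_weights = rng.normal(scale=0.1, size=(TOY_SAMPLE_DIM, 1))


def toy_generator(noise):
    """Map a batch of noise vectors to fake samples with values in [-1, 1]."""
    return np.tanh(noise @ gen_weights)


def toy_discriminator(samples):
    """Return, for each sample, the estimated probability that it is real."""
    logits = samples @ disc_weights
    return 1.0 / (1.0 + np.exp(-logits))


noise = rng.normal(size=(5, TOY_NOISE_DIM))   # 5 random noise vectors
fake_samples = toy_generator(noise)           # shape (5, TOY_SAMPLE_DIM)
p_real = toy_discriminator(fake_samples)      # shape (5, 1), each in (0, 1)

print(fake_samples.shape, p_real.shape)
```

The generator only ever sees noise, and the discriminator only ever sees samples. Everything the generator learns about the real data reaches it indirectly, through the discriminator's judgement.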

How Do Generative Adversarial Networks (GANs) Work?

GANs operate through a competition between the generator and the discriminator. The generator transforms random noise into samples that resemble the real dataset, and the discriminator evaluates both real and generated samples, attempting to tell them apart. As training progresses, the generator produces increasingly convincing fakes to fool the discriminator, while the discriminator improves its ability to spot them. Here's how it works:

Initialization: Both the generator and discriminator are initialized with random weights.

Training Loop:

The generator takes a noise vector (often sampled from a Gaussian distribution) and generates a data sample.

This generated data is passed to the discriminator along with real data from the training set.

The discriminator attempts to classify the samples as real or fake.

The discriminator's output is used to calculate two losses: one for the generator and one for the discriminator.

The generator's goal is to minimize the loss by fooling the discriminator into thinking the fake data is real.

The discriminator's goal is to maximize its accuracy in distinguishing between real and fake data.

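This process repeats until the generator produces data that the discriminator can no longer tell apart from real data. In practice, training usually runs for a fixed number of epochs, and the generated samples are inspected along the way.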
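The steps above can be sketched on a toy one-dimensional problem. This is a hedged illustration, not the implementation used later in this post. It assumes a generator that is a simple affine map `a * z + b`, a discriminator that is a logistic classifier `sigmoid(w * x + c)`, binary cross-entropy losses, and hand-derived gradients. All function names and parameter values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(seed=0)


def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))


def generate(gen, z):
    """Generator: an affine map from noise z to a fake sample."""
    return gen["a"] * z + gen["b"]


def discriminate(disc, x):
    """Discriminator: estimated probability that each x is real."""
    return sigmoid(disc["w"] * x + disc["c"])


def gan_losses(gen, disc, x_real, z):
    """Binary cross-entropy losses for the discriminator and the generator."""
    p_real = discriminate(disc, x_real)
    p_fake = discriminate(disc, generate(gen, z))
    d_loss = -np.mean(np.log(p_real)) - np.mean(np.log(1.0 - p_fake))
    g_loss = -np.mean(np.log(p_fake))  # low when fakes are scored as real
    return d_loss, g_loss


def discriminator_step(gen, disc, x_real, z, lr):
    """One gradient-descent step on the discriminator loss."""
    x_fake = generate(gen, z)
    p_real = discriminate(disc, x_real)
    p_fake = discriminate(disc, x_fake)
    grad_w = np.mean((p_real - 1.0) * x_real) + np.mean(p_fake * x_fake)
    grad_c = np.mean(p_real - 1.0) + np.mean(p_fake)
    return {"w": disc["w"] - lr * grad_w, "c": disc["c"] - lr * grad_c}


def generator_step(gen, disc, z, lr):
    """One gradient-descent step on the generator loss."""
    p_fake = discriminate(disc, generate(gen, z))
    # Per-sample gradient of the generator loss w.r.t. each fake sample
    upstream = (p_fake - 1.0) * disc["w"]
    grad_a = np.mean(upstream * z)
    grad_b = np.mean(upstream)
    return {"a": gen["a"] - lr * grad_a, "b": gen["b"] - lr * grad_b}


# Initialization: small random weights for both networks.
gen = {"a": rng.normal(scale=0.1), "b": rng.normal(scale=0.1)}
disc = {"w": rng.normal(scale=0.1), "c": rng.normal(scale=0.1)}

x_real = rng.normal(loc=4.0, scale=1.0, size=64)  # toy "real" data
z = rng.normal(size=64)                            # batch of noise

# One iteration of the training loop: update the discriminator, then the generator.
d_before, _ = gan_losses(gen, disc, x_real, z)
disc = discriminator_step(gen, disc, x_real, z, lr=0.05)
d_after, g_mid = gan_losses(gen, disc, x_real, z)
gen = generator_step(gen, disc, z, lr=0.05)
_, g_after = gan_losses(gen, disc, x_real, z)

print(f"discriminator loss: {d_before:.4f} -> {d_after:.4f}")
print(f"generator loss:     {g_mid:.4f} -> {g_after:.4f}")
```

Each player takes a step that lowers its own loss while the other player is held fixed. Repeating this alternation many times is what drives the adversarial game. The generator loss here rewards fakes that the discriminator scores as real, which matches the "fool the discriminator" goal described above.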

Applications of Generative Adversarial Networks (GANs)

GANs have a wide range of applications, particularly in fields that require data generation and transformation. Beyond the tasks described below, they are also used for generating synthetic training data, audio synthesis, and creating deepfakes. Their main applications include:

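Image Generation: GANs are widely used to generate high-quality images, such as faces, landscapes, and even artwork. Examples include NVIDIA's StyleGAN, which generates photorealistic faces, and NVIDIA's GauGAN, which turns rough segmentation sketches into landscape images.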

Image-to-Image Translation: GANs can transform images from one domain to another. For example, they can turn sketches into realistic photos, convert black-and-white images to color, or even change the seasons in a landscape photo.

Text-to-Image Synthesis: GANs can generate images based on textual descriptions. This is particularly useful in applications like creating images for stories or designing products based on customer descriptions.

Super-Resolution: GANs can enhance the resolution of images, making them sharper and more detailed. This has significant applications in fields like medical imaging and satellite imagery.

Video Generation: GANs can be used to generate realistic video frames, making them useful in video compression, virtual reality, and creating synthetic training data for machine learning models.

Generative Adversarial Networks (GANs) Implementation in Python

The following TensorFlow/Keras code trains a small convolutional GAN on the MNIST dataset and, after training, generates images that resemble handwritten digits. Adjust the EPOCHS constant to trade training time against image quality.

import tensorflow as tf
from tensorflow.keras import layers
import numpy as np
import matplotlib.pyplot as plt

# Load and preprocess the MNIST dataset (only the training images are needed)
(x_train, _), (_, _) = tf.keras.datasets.mnist.load_data()
x_train = x_train.reshape(x_train.shape[0], 28, 28, 1).astype('float32')
x_train = (x_train - 127.5) / 127.5  # Normalize to [-1, 1] to match the generator's tanh output

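# Set up parameters
BUFFER_SIZE = 60000  # Shuffle buffer covers all 60,000 MNIST training images
BATCH_SIZE = 256
NOISE_DIM = 100      # Length of the generator's input noise vector
EPOCHS = 50          # Full passes over the training set; increase for sharper digits

# Create a TensorFlow dataset
train_dataset = tf.data.Dataset.from_tensor_slices(x_train).shuffle(BUFFER_SIZE).batch(BATCH_SIZE)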

# Build the generator
def make_generator_model():
    model = tf.keras.Sequential()
    # Project the noise vector and reshape it into a 7x7 feature map with 256 channels
    model.add(layers.Dense(7*7*256, use_bias=False, input_shape=(NOISE_DIM,)))
    model.add(layers.BatchNormalization())
    model.add(layers.LeakyReLU())
    model.add(layers.Reshape((7, 7, 256)))
    # Stride 1 keeps the 7x7 resolution
    model.add(layers.Conv2DTranspose(128, (5, 5), strides=(1, 1), padding='same', use_bias=False))
    model.add(layers.BatchNormalization())
    model.add(layers.LeakyReLU())
    # Stride 2 upsamples 7x7 to 14x14
    model.add(layers.Conv2DTranspose(64, (5, 5), strides=(2, 2), padding='same', use_bias=False))
    model.add(layers.BatchNormalization())
    model.add(layers.LeakyReLU())
    # Stride 2 upsamples 14x14 to 28x28; tanh keeps pixel values in [-1, 1]
    model.add(layers.Conv2DTranspose(1, (5, 5), strides=(2, 2), padding='same', use_bias=False, activation='tanh'))
    return model

generator = make_generator_model()

# Build the discriminator
def make_discriminator_model():
    model = tf.keras.Sequential()
    # Strided convolutions downsample 28x28 to 14x14, then to 7x7
    model.add(layers.Conv2D(64, (5, 5), strides=(2, 2), padding='same', input_shape=[28, 28, 1]))
    model.add(layers.LeakyReLU())
    model.add(layers.Dropout(0.3))
    model.add(layers.Conv2D(128, (5, 5), strides=(2, 2), padding='same'))
    model.add(layers.LeakyReLU())
    model.add(layers.Dropout(0.3))
    model.add(layers.Flatten())
    # Single raw logit per image; the loss below applies the sigmoid
    model.add(layers.Dense(1))
    return model

discriminator = make_discriminator_model()

# Define the loss and optimizers
cross_entropy = tf.keras.losses.BinaryCrossentropy(from_logits=True)

def discriminator_loss(real_output, fake_output):
    # Real images should be labelled 1, generated images 0
    real_loss = cross_entropy(tf.ones_like(real_output), real_output)
    fake_loss = cross_entropy(tf.zeros_like(fake_output), fake_output)
    return real_loss + fake_loss

def generator_loss(fake_output):
    # The generator succeeds when the discriminator labels its images as real (1)
    return cross_entropy(tf.ones_like(fake_output), fake_output)

generator_optimizer = tf.keras.optimizers.Adam(1e-4)
discriminator_optimizer = tf.keras.optimizers.Adam(1e-4)

# Training step
@tf.function
def train_step(images):
    noise = tf.random.normal([BATCH_SIZE, NOISE_DIM])

    # Record the forward pass on two tapes so each network gets its own gradients
    with tf.GradientTape() as gen_tape, tf.GradientTape() as disc_tape:
        generated_images = generator(noise, training=True)

        real_output = discriminator(images, training=True)
        fake_output = discriminator(generated_images, training=True)

        gen_loss = generator_loss(fake_output)
        disc_loss = discriminator_loss(real_output, fake_output)

    gradients_of_generator = gen_tape.gradient(gen_loss, generator.trainable_variables)
    gradients_of_discriminator = disc_tape.gradient(disc_loss, discriminator.trainable_variables)

    generator_optimizer.apply_gradients(zip(gradients_of_generator, generator.trainable_variables))
    discriminator_optimizer.apply_gradients(zip(gradients_of_discriminator, discriminator.trainable_variables))

# Training loop
def train(dataset, epochs):
    for epoch in range(epochs):
        for image_batch in dataset:
            train_step(image_batch)

        # Preview the generator's progress every 10 epochs
        if epoch % 10 == 0:
            generate_and_save_images(generator, epoch + 1)

def generate_and_save_images(model, epoch):
    noise = tf.random.normal([16, NOISE_DIM])
    generated_images = model(noise, training=False)

    fig = plt.figure(figsize=(4, 4))
    for i in range(generated_images.shape[0]):
        plt.subplot(4, 4, i + 1)
        # Map pixel values from [-1, 1] back to [0, 255] for display
        plt.imshow(generated_images[i, :, :, 0] * 127.5 + 127.5, cmap='gray')
        plt.axis('off')

    # Save the 4x4 grid to disk (the file name is illustrative), then display it
    plt.savefig(f'image_at_epoch_{epoch:04d}.png')
    plt.show()

# Train the GAN
train(train_dataset, EPOCHS)
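A quick way to check that the generator and discriminator in the listing above fit together is to trace the spatial size of the feature maps layer by layer. The sketch below is plain Python and does not call TensorFlow. It assumes Keras' behaviour for `padding='same'`, where a transposed convolution multiplies the size by the stride and a regular convolution divides it by the stride, rounding up. The helper names are hypothetical.

```python
def conv_transpose_same_output(size, stride):
    """Spatial output size of a transposed convolution with 'same' padding."""
    return size * stride


def conv_same_output(size, stride):
    """Spatial output size of a strided convolution with 'same' padding."""
    return -(-size // stride)  # integer form of ceil(size / stride)


# Generator: reshape to 7x7, then transposed convolutions with strides 1, 2, 2.
size = 7
generator_sizes = [size]
for stride in (1, 2, 2):
    size = conv_transpose_same_output(size, stride)
    generator_sizes.append(size)

# Discriminator: 28x28 input, then convolutions with strides 2, 2.
size = 28
discriminator_sizes = [size]
for stride in (2, 2):
    size = conv_same_output(size, stride)
    discriminator_sizes.append(size)

# Number of features reaching Dense(1): height * width * 128 channels
flattened_features = discriminator_sizes[-1] ** 2 * 128

print(generator_sizes)      # [7, 7, 14, 28]
print(discriminator_sizes)  # [28, 14, 7]
print(flattened_features)   # 6272
```

The generator ends at 28x28, the same size as an MNIST digit, so its output can be fed straight into the discriminator alongside real images.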

Conclusion

Generative Adversarial Networks (GANs) represent a powerful and innovative approach to machine learning, capable of generating highly realistic data that mimics the real world. From creating stunning images and transforming them in various ways to enhancing the resolution of low-quality data, GANs have found applications across a diverse range of fields. While they come with challenges such as training instability and computational demands, their potential is vast and continually expanding. Whether you're a researcher, developer, or enthusiast, understanding and experimenting with GANs opens up exciting possibilities in artificial intelligence. As the technology evolves, GANs will likely play an increasingly crucial role in driving forward new advancements in AI and creative fields.
