Implementing Neural Networks on the CIFAR-10 Dataset Using TensorFlow in Python

- Samul Black

- Aug 13, 2024

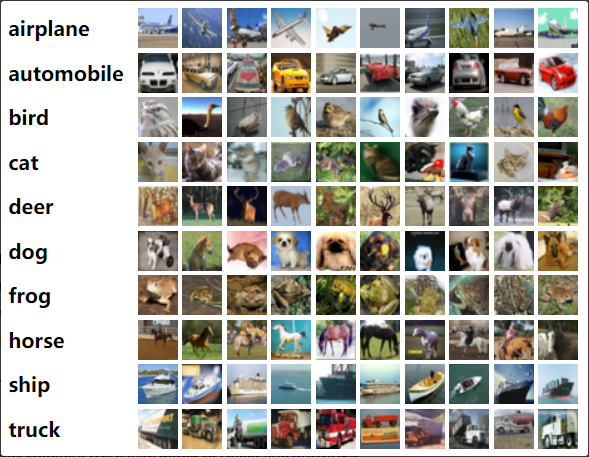

The CIFAR-10 dataset is a popular benchmark in machine learning and computer vision. It consists of 60,000 32x32 color images (50,000 for training and 10,000 for testing) divided evenly into 10 classes, such as airplanes, automobiles, and birds. In this post, we'll use TensorFlow to build a neural network that classifies these images. We'll cover the essential steps, from loading and preparing the dataset to building, training, and evaluating a convolutional neural network (CNN).

What are Neural Networks?

Neural networks are a class of machine learning models inspired by the structure and function of the human brain. They are designed to recognize patterns, make predictions, and perform various tasks by learning from data. A neural network is composed of layers of interconnected nodes, or neurons. These layers are typically organized into three main types:

Input Layer: The first layer that receives the raw data or features. Each neuron in this layer represents a feature or attribute of the input data.

Hidden Layers: One or more layers between the input and output layers. Neurons in hidden layers perform computations on the inputs they receive. These layers extract and learn features from the data through weighted connections.

Output Layer: The final layer that produces the network's output, such as a prediction or classification. Each neuron in this layer corresponds to a possible output or class.
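To make the three layer types concrete, here is a minimal sketch of data flowing from an input layer through one hidden layer to an output layer. It uses only the Python standard library, and the weights, biases, and feature values are made-up numbers chosen for illustration, not values from a trained model.

```python
import math

def relu(values):
    """Element-wise ReLU: negative values become 0."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(values)
    exps = [math.exp(v - largest) for v in values]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum plus bias for each neuron."""
    return [sum(w * x for w, x in zip(neuron_w, inputs)) + b
            for neuron_w, b in zip(weights, biases)]

# Hypothetical weights: 3 input features -> 2 hidden neurons -> 2 output classes
hidden_w = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
hidden_b = [0.0, 0.1]
output_w = [[1.0, -1.0], [-0.5, 0.5]]
output_b = [0.0, 0.0]

features = [1.0, 2.0, 3.0]                           # input layer: one value per feature
hidden = relu(dense(features, hidden_w, hidden_b))   # hidden layer
probs = softmax(dense(hidden, output_w, output_b))   # output layer: one value per class
print(probs)
```

Each output value can be read as the network's confidence in one class. The CNN built later in this post follows the same pattern, with convolutional layers in the hidden part and 10 output neurons.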

Neural networks learn by adjusting the weights of connections between neurons through a process called training. This involves:

Forward Propagation: Data is passed through the network from the input layer to the output layer. Each neuron computes a weighted sum of its inputs, applies an activation function, and passes the result to the next layer.

Activation Function: Functions like ReLU (Rectified Linear Unit) or sigmoid introduce non-linearity into the model, allowing it to learn complex patterns. The activation function transforms a neuron's weighted sum into its output; ReLU, for example, passes positive values through unchanged and outputs zero otherwise.

Loss Function: Measures how well the network's predictions match the actual results. Common loss functions include Mean Squared Error for regression tasks and Cross-Entropy Loss for classification tasks.

Backpropagation: The method used to work out how each weight should change to reduce the loss. It calculates the gradient of the loss function with respect to each weight by applying the chain rule backward through the layers. An optimization algorithm such as Gradient Descent then uses these gradients to update the weights.
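The four ideas above fit together in a single training loop. The sketch below trains one sigmoid neuron with plain gradient descent on a tiny made-up dataset. The data, learning rate, and number of epochs are assumptions chosen for illustration, and the loss is binary cross-entropy rather than the multi-class loss used later in this post.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy data (hypothetical): the label is 1 when the single feature is positive
samples = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]

weight, bias = 0.0, 0.0
learning_rate = 0.5
losses = []

for epoch in range(50):
    grad_w = grad_b = total_loss = 0.0
    for x, y in samples:
        # Forward propagation: weighted sum, then activation
        p = sigmoid(weight * x + bias)
        # Loss function: binary cross-entropy for this sample
        total_loss += -(y * math.log(p) + (1 - y) * math.log(1 - p))
        # Backpropagation: for sigmoid + cross-entropy, dLoss/dz = p - y
        grad_w += (p - y) * x
        grad_b += (p - y)
    n = len(samples)
    # Gradient descent: step against the average gradient
    weight -= learning_rate * grad_w / n
    bias -= learning_rate * grad_b / n
    losses.append(total_loss / n)

print(f"first loss {losses[0]:.4f}, last loss {losses[-1]:.4f}")
```

The loss recorded for each epoch shrinks as the weight grows in the direction that separates the two labels. Frameworks such as TensorFlow automate the gradient computation, so the same loop never has to be written by hand for larger networks.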

Implementing Neural Networks on the CIFAR-10 Dataset Using TensorFlow in Python

Implementing neural networks on the CIFAR-10 dataset using TensorFlow in Python involves several key steps to develop an effective image classification model. First, the dataset is loaded and preprocessed, including normalizing pixel values to ensure that the neural network can learn effectively. A convolutional neural network (CNN) is then constructed using TensorFlow's high-level Keras API, which includes layers such as convolutional layers for feature extraction, max-pooling layers for dimensionality reduction, and dense layers for classification. The model is trained on the training data and validated using the test data to ensure it generalizes well. Visualization of training and validation metrics helps monitor performance and adjust hyperparameters as needed. This process highlights TensorFlow’s capabilities in handling complex image data and provides a practical approach to building and evaluating deep learning models for computer vision tasks.
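One practical side effect of normalization is memory use. The back-of-the-envelope sketch below assumes the images arrive as 8-bit integers and that dividing a NumPy integer array by `255.0` produces 64-bit floats (NumPy's default float type). The helper name `image_bytes` is hypothetical.

```python
BYTES_UINT8 = 1      # raw pixels are stored as 8-bit integers
BYTES_FLOAT64 = 8    # assumed dtype after dividing a uint8 array by 255.0

def image_bytes(num_images, bytes_per_value, height=32, width=32, channels=3):
    """Total bytes needed to hold num_images images of the given size."""
    return num_images * height * width * channels * bytes_per_value

raw_train = image_bytes(50_000, BYTES_UINT8)
normalized_train = image_bytes(50_000, BYTES_FLOAT64)

print(f"raw training images:        {raw_train / 1e6:.1f} MB")
print(f"normalized training images: {normalized_train / 1e6:.1f} MB")
```

Under these assumptions the normalized training set is eight times larger than the raw one. If memory is tight, a common option is to cast to 32-bit floats before dividing, for example `train_images.astype("float32") / 255.0`, which halves the footprint.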

Step 1: Setting Up Your Environment

To get started, you'll need to install TensorFlow and other essential libraries. You can install them using pip:

pip install tensorflow numpy matplotlib

Step 2: Loading, Preprocessing, and Visualizing the CIFAR-10 Dataset

TensorFlow provides convenient functions to load and preprocess the CIFAR-10 dataset. Here’s how you can load the dataset and normalize it:

import tensorflow as tf
import matplotlib.pyplot as plt
from tensorflow.keras.datasets import cifar10

# Load the CIFAR-10 dataset
(train_images, train_labels), (test_images, test_labels) = cifar10.load_data()

# Normalize pixel values to be between 0 and 1
train_images, test_images = train_images / 255.0, test_images / 255.0

# Define class names (list index i corresponds to label i)
class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

# Plot the first 25 images in a 5x5 grid, titled with their class names
def plot_images(images, labels, class_names):
    plt.figure(figsize=(15, 15))
    for i in range(25):
        plt.subplot(5, 5, i + 1)
        plt.imshow(images[i])
        # Labels have shape (N, 1), so take element 0 to get the class index
        plt.title(class_names[labels[i][0]])
        plt.axis('off')
    plt.show()

plot_images(train_images, train_labels, class_names)

Output for the code above:

Step 3: Building the Convolutional Neural Network (CNN)

Convolutional Neural Networks are particularly well-suited for image classification tasks. Here’s a simple CNN model using TensorFlow:

from tensorflow.keras import layers, models

# Build the CNN model
model = models.Sequential([
    layers.Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.Flatten(),
    layers.Dense(64, activation='relu'),
    layers.Dense(10, activation='softmax')
])

# Compile the model
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Summary
model.summary()

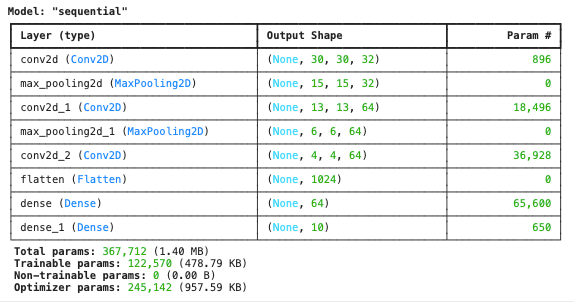

Output for the code above:

Convolutional Layers: Extract features from images.

MaxPooling Layers: Reduce dimensionality while preserving features.

Dense Layers: Perform classification based on extracted features.

Activation Function: Softmax is used in the output layer for multi-class classification.
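The layer sizes reported by `model.summary()` can be reproduced by hand. The sketch below walks through the same stack of layers and computes the feature-map shapes and the number of trainable parameters. It assumes the Keras defaults that the model relies on: 'valid' padding with stride 1 for `Conv2D`, and a pooling stride equal to the pool size for `MaxPooling2D`. The helper function names are hypothetical.

```python
def conv_output(size, kernel):
    """Spatial size after a 'valid' convolution with stride 1."""
    return size - kernel + 1

def pool_output(size, pool):
    """Spatial size after max pooling with stride equal to the pool size."""
    return size // pool

def conv_params(kernel, in_channels, filters):
    """Kernel weights plus one bias per filter."""
    return kernel * kernel * in_channels * filters + filters

def dense_params(inputs, units):
    """One weight per input-unit pair plus one bias per unit."""
    return inputs * units + units

size, channels = 32, 3   # CIFAR-10 input: 32x32 pixels, 3 color channels
total = 0
shapes = []

# (layer type, filters or pool size) in the order used by the model above
for kind, value in [("conv", 32), ("pool", 2), ("conv", 64), ("pool", 2), ("conv", 64)]:
    if kind == "conv":
        total += conv_params(3, channels, value)
        size, channels = conv_output(size, 3), value
    else:
        size = pool_output(size, value)
    shapes.append((size, size, channels))

flat = size * size * channels      # Flatten: 4 * 4 * 64 values
total += dense_params(flat, 64)    # Dense(64)
total += dense_params(64, 10)      # Dense(10)

print(shapes)        # feature-map shape after each conv/pool layer
print(flat, total)   # flattened length and total trainable parameters
```

The image shrinks from 32x32 to 4x4 while the number of channels grows from 3 to 64, and more than half of the parameters end up in the first dense layer, which connects all 1,024 flattened values to 64 units.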

Step 4: Training the Model

With the model built, it’s time to train it using the training dataset:

# Model Training
history = model.fit(train_images, train_labels, epochs=10,
                    validation_data=(test_images, test_labels))

Output for the code above:

Epoch 1/10

1563/1563 ━━━━━━━━━━━━━━━━━━━━ 79s 49ms/step - accuracy: 0.3444 - loss: 1.7613 - val_accuracy: 0.5215 - val_loss: 1.3080

Epoch 2/10

1563/1563 ━━━━━━━━━━━━━━━━━━━━ 76s 49ms/step - accuracy: 0.5646 - loss: 1.2207 - val_accuracy: 0.5978 - val_loss: 1.1111

Epoch 3/10

1563/1563 ━━━━━━━━━━━━━━━━━━━━ 82s 49ms/step - accuracy: 0.6282 - loss: 1.0516 - val_accuracy: 0.6190 - val_loss: 1.0786

Epoch 4/10

1563/1563 ━━━━━━━━━━━━━━━━━━━━ 81s 48ms/step - accuracy: 0.6641 - loss: 0.9466 - val_accuracy: 0.6622 - val_loss: 0.9737

Epoch 5/10

1563/1563 ━━━━━━━━━━━━━━━━━━━━ 84s 49ms/step - accuracy: 0.6950 - loss: 0.8698 - val_accuracy: 0.6819 - val_loss: 0.9084

Epoch 6/10

1563/1563 ━━━━━━━━━━━━━━━━━━━━ 87s 52ms/step - accuracy: 0.7199 - loss: 0.7948 - val_accuracy: 0.6912 - val_loss: 0.8927

Epoch 7/10

1563/1563 ━━━━━━━━━━━━━━━━━━━━ 75s 48ms/step - accuracy: 0.7407 - loss: 0.7426 - val_accuracy: 0.7030 - val_loss: 0.8782

Epoch 8/10

1563/1563 ━━━━━━━━━━━━━━━━━━━━ 78s 50ms/step - accuracy: 0.7572 - loss: 0.6901 - val_accuracy: 0.7094 - val_loss: 0.8509

Epoch 9/10

1563/1563 ━━━━━━━━━━━━━━━━━━━━ 77s 49ms/step - accuracy: 0.7659 - loss: 0.6600 - val_accuracy: 0.6976 - val_loss: 0.8982

Epoch 10/10

1563/1563 ━━━━━━━━━━━━━━━━━━━━ 80s 48ms/step - accuracy: 0.7807 - loss: 0.6208 - val_accuracy: 0.7075 - val_loss: 0.8749

Epochs: Number of times the model will iterate over the entire training dataset.

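Validation Data: Used to evaluate the model's performance at the end of each epoch. Here the test set is passed as validation data, so the val_accuracy reported for the final epoch is the same figure that model.evaluate returns in Step 5.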
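The `1563/1563` counter in the training log is the number of batches per epoch. A quick calculation reproduces it, assuming the Keras default batch size of 32, which applies because `fit` was called without a `batch_size` argument.

```python
import math

train_samples = 50_000   # CIFAR-10 training images
test_samples = 10_000    # CIFAR-10 test images
batch_size = 32          # Keras default when fit() is called without batch_size

# The last batch is smaller than the rest, so round up
steps_per_epoch = math.ceil(train_samples / batch_size)
eval_steps = math.ceil(test_samples / batch_size)
print(steps_per_epoch, eval_steps)
```

The second number matches the `313/313` counter printed when the model is evaluated on the test set. Passing a larger `batch_size` to `fit` would reduce the number of steps per epoch accordingly.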

Step 5: Evaluating the Model

After training, evaluate the model’s performance on the test dataset to see how well it generalizes to new data:

# Model Evaluation
test_loss, test_acc = model.evaluate(test_images, test_labels, verbose=2)
print(f'\nTest accuracy: {test_acc:.4f}')

Output for the above code:

313/313 - 5s - 16ms/step - accuracy: 0.7075 - loss: 0.8749

Test accuracy: 0.7075

Step 6: Visualizing Training Progress

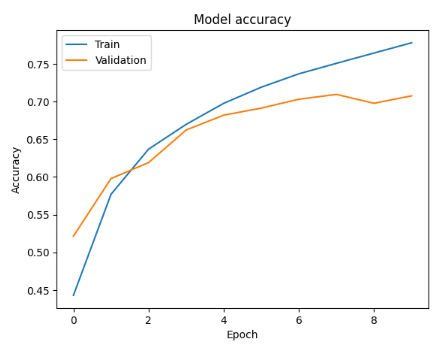

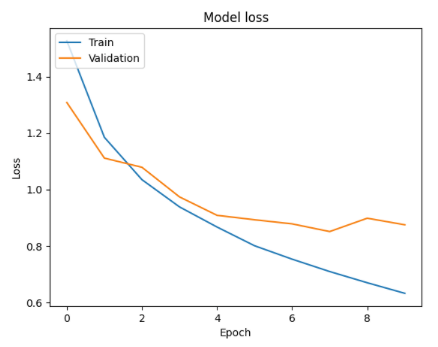

Visualizing the training and validation accuracy can provide insights into the model’s learning process:

import matplotlib.pyplot as plt

# Plot training & validation accuracy values
plt.plot(history.history['accuracy'])
plt.plot(history.history['val_accuracy'])
plt.title('Model accuracy')
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.legend(['Train', 'Validation'], loc='upper left')
plt.show()

Output for the above code:

# Plot training & validation loss values
plt.plot(history.history['loss'])
plt.plot(history.history['val_loss'])
plt.title('Model loss')
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.legend(['Train', 'Validation'], loc='upper left')
plt.show()

Output for the above code:

Full code for Implementing Neural Networks on the CIFAR-10 Dataset Using TensorFlow in Python

The full code for implementing neural networks on the CIFAR-10 dataset using TensorFlow in Python involves loading the dataset, normalizing the images, and defining a Convolutional Neural Network (CNN) with convolutional, pooling, and dense layers. After compiling the model with an appropriate optimizer and loss function, it is trained on the CIFAR-10 training data and evaluated on the test data. Visualization of training progress through accuracy and loss plots provides insights into the model’s performance. This code demonstrates a complete pipeline from data preparation to model evaluation, leveraging TensorFlow's powerful tools for image classification.

# Import necessary libraries
import tensorflow as tf
from tensorflow.keras.datasets import cifar10
from tensorflow.keras import layers, models
import matplotlib.pyplot as plt

# Load the CIFAR-10 dataset
(train_images, train_labels), (test_images, test_labels) = cifar10.load_data()

# Normalize pixel values to be between 0 and 1
train_images, test_images = train_images / 255.0, test_images / 255.0

# Define class names
class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

# Plot some images
def plot_images(images, labels, class_names):
    plt.figure(figsize=(15, 15))
    for i in range(25):
        plt.subplot(5, 5, i + 1)
        plt.imshow(images[i])
        plt.title(class_names[labels[i][0]])
        plt.axis('off')
    plt.show()

plot_images(train_images, train_labels, class_names)

# Build the CNN model
model = models.Sequential([
    layers.Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.Flatten(),
    layers.Dense(64, activation='relu'),
    layers.Dense(10, activation='softmax')
])

# Compile the model
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Summary
model.summary()

# Model Training
history = model.fit(train_images, train_labels, epochs=10,
                    validation_data=(test_images, test_labels))

# Model Evaluation
test_loss, test_acc = model.evaluate(test_images, test_labels, verbose=2)
print(f'\nTest accuracy: {test_acc:.4f}')

# Plot training & validation accuracy values
plt.plot(history.history['accuracy'])
plt.plot(history.history['val_accuracy'])
plt.title('Model accuracy')
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.legend(['Train', 'Validation'], loc='upper left')
plt.show()

# Plot training & validation loss values
plt.plot(history.history['loss'])
plt.plot(history.history['val_loss'])
plt.title('Model loss')
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.legend(['Train', 'Validation'], loc='upper left')
plt.show()

Conclusion

Implementing neural networks on the CIFAR-10 dataset using TensorFlow in Python offers a practical introduction to image classification with deep learning. Using TensorFlow's Keras API, we loaded and normalized the dataset, built and trained a convolutional neural network (CNN), and evaluated it on the test data, reaching about 71% test accuracy after 10 epochs. The training log also shows training accuracy (about 78%) pulling ahead of validation accuracy in the later epochs, an early sign of overfitting. This workflow serves as a solid foundation for exploring more advanced deep learning techniques and tackling more complex computer vision tasks.